Interviews

Experiencing Disabilities Through a Developmental Disorder Mixed Reality System (Part 2)

Eiichi Miyazaki

Using discomfort to help show the difficulties in disability

Interviewer & Japanese Writer: Yamamoto Takaya; Translation & Editing: Matthew Cherry

Eiichi Miyazaki was selected for the 2020 INNO-vation Program’s Disruptive Challenge for his proposed mixed reality (MR) system, designed to let users experience the difficulties that people with developmental disorders face in everyday life. As soon as he was selected, he faced a major hurdle: his challenge was still only an idea. He had conceptualized the system, but no actual device existed yet.

So he began developing a system that captured 4K, 360-degree video. The footage would then be processed on a computer and displayed in a VR headset, letting users see the world much as people whose developmental disorders affect their vision do.

The hard part for Miyazaki was working out exactly how to reproduce that visual experience. His preliminary research showed that people whose developmental disorders affect their vision often experience double vision or heightened contrast. This was a useful starting point, but it left open how closely a simulation of those phenomena would match the real experience. To answer that, he began seeking advice from people who support those with developmental disorders.

This image shows the point of view when no movement is detected.

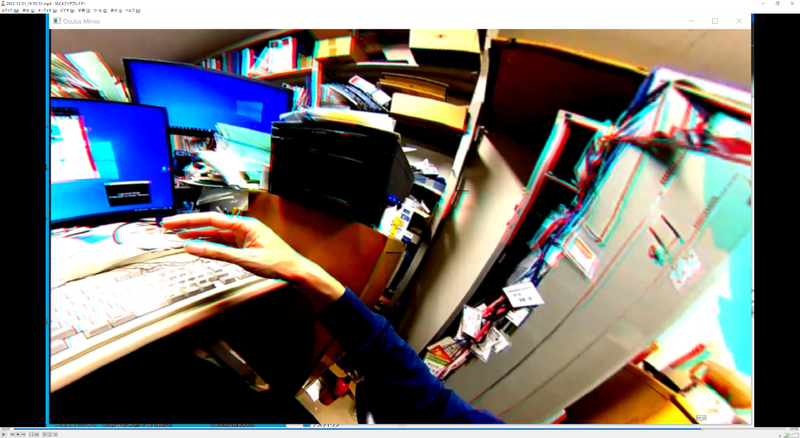

Note the changes in color and focus when movement of the hand is detected.

With the advice he received, he continued his research and eventually completed his MR system: a device built around a headset that lets users experience a simulation of what people with developmental disorders perceive.

“I had people try the system for themselves, and they often told me that it was difficult to look at, or that it was hard on their eyes,” Miyazaki shared. “But that’s exactly what people with developmental disorders have to live with 24/7. I think experiencing this will help people better understand those with developmental disorders.”

The MR system can also be interactive, with images distorting in response to the sound level of the surrounding environment. Simply by changing the program, the experience can be adapted to simulate what people with noise sensitivities go through.

“Many people with developmental disorders grow anxious or panic when they are around a large number of people. If we used image recognition to count the number of people nearby and changed the images shown in the headset accordingly, we could replicate what it’s like for those people as well. Although I only used sight and sound in this research, I think scent could be used, too. That would expand the range of simulations we could produce,” Miyazaki said.

In Part 3, we ask Miyazaki about his activities beyond the INNO-vation Program.

Continued in Part 3 (Coming January 2nd, 2023)